Concept Learning in Machine Learning – 17CS73

The problem of inducing general functions from specific training examples is central to learning.

Concept learning can be formulated as a problem of searching through a predefined space of potential hypotheses for the hypothesis that best fits the training examples.

What is Concept Learning?

“The task of acquiring a hypothesis (solution) that best fits the given training examples.”

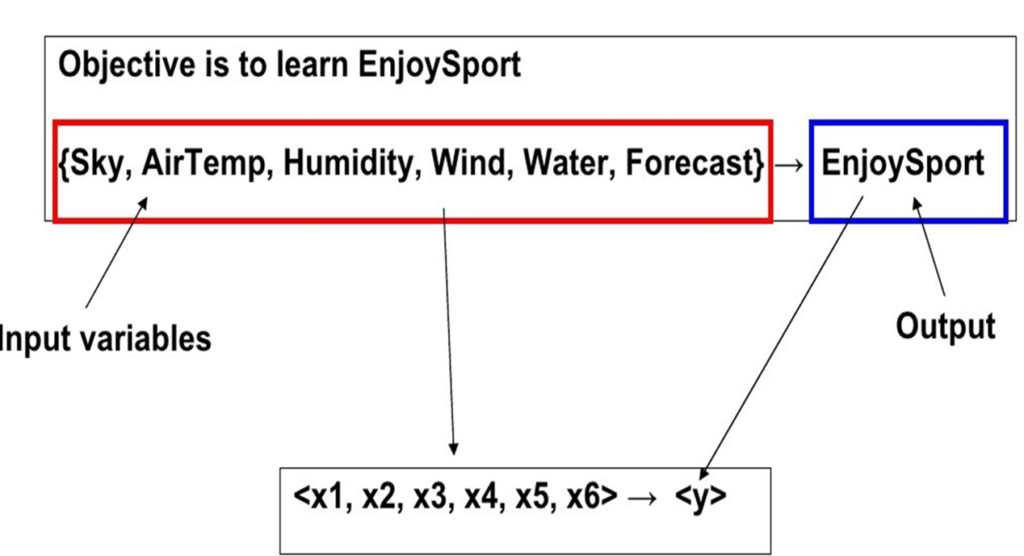

Consider the example task of learning the target concept “days on which my friend Prabhas enjoys his favorite water sport.”

The table below describes a set of example days, each represented by a set of attributes. The attribute EnjoySport indicates whether or not Prabhas enjoys his favorite water sport on that day. The task is to learn to predict the value of EnjoySport for an arbitrary day, based on the values of its other attributes.

What hypothesis representation shall we provide to the learner in this case?

Let us begin by considering a simple representation in which each hypothesis consists of a conjunction of constraints on the instance attributes.

In particular, let each hypothesis be a vector of six constraints, specifying the values of the six attributes Sky, AirTemp, Humidity, Wind, Water, and Forecast.

For each attribute, the hypothesis will either

- indicate by a “?” that any value is acceptable for this attribute,

- specify a single required value (e.g., Warm) for the attribute, or

- indicate by a “ø” that no value is acceptable.

If some instance x satisfies all the constraints of hypothesis h, then h classifies x as a positive example (h(x) = 1).

To illustrate, the hypothesis that Prabhas enjoys his favorite sport only on cold days with high humidity (independent of the values of the other attributes) is represented by the expression

(?, Cold, High, ?, ?, ?)
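The classification rule above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `classify`, the tuple encoding, and the use of `None` to stand in for “ø” are all assumptions made for this example, and the two sample days are invented.

```python
# Assumed encoding: "?" accepts any value, None stands in for "ø" (accepts nothing).
ANY = "?"
NONE = None

def classify(h, x):
    """Return 1 if instance x satisfies every constraint of hypothesis h, else 0."""
    for constraint, value in zip(h, x):
        if constraint is NONE:
            return 0  # "ø" rejects every value
        if constraint != ANY and constraint != value:
            return 0  # a required value is not met
    return 1

# Attribute order: Sky, AirTemp, Humidity, Wind, Water, Forecast
h = (ANY, "Cold", "High", ANY, ANY, ANY)

# Two invented days, used only to exercise the function.
x_pos = ("Sunny", "Cold", "High", "Strong", "Warm", "Same")
x_neg = ("Sunny", "Warm", "High", "Strong", "Warm", "Same")

print(classify(h, x_pos))  # 1
print(classify(h, x_neg))  # 0
```

The second day is rejected because its AirTemp is Warm, while the hypothesis requires Cold.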

Most General and Specific Hypothesis

The most general hypothesis, that every day is a positive example, is represented by

(?, ?, ?, ?, ?, ?)

and the most specific possible hypothesis, that no day is a positive example, is represented by

(ø, ø, ø, ø, ø, ø)
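As a quick check of these two extremes, the sketch below enumerates every possible day and tests both hypotheses against it. The attribute value names in `DOMAINS` are assumed for illustration (the article only names a few of them), and `satisfies` is a hypothetical helper.

```python
from itertools import product

# Assumed value names, one tuple per attribute in the order
# Sky, AirTemp, Humidity, Wind, Water, Forecast.
DOMAINS = [
    ("Sunny", "Cloudy", "Rainy"),
    ("Warm", "Cold"),
    ("Normal", "High"),
    ("Strong", "Weak"),
    ("Warm", "Cool"),
    ("Same", "Change"),
]

def satisfies(h, x):
    # "?" matches anything; "ø" never equals a real value, so it matches nothing.
    return all(c == "?" or c == v for c, v in zip(h, x))

most_general = ("?",) * 6
most_specific = ("ø",) * 6

instances = list(product(*DOMAINS))

print(all(satisfies(most_general, x) for x in instances))   # True
print(any(satisfies(most_specific, x) for x in instances))  # False
```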

A CONCEPT LEARNING TASK – Search

Concept learning can be viewed as the task of searching through a large space of hypotheses implicitly defined by the hypothesis representation.

The goal of this search is to find the hypothesis that best fits the training examples.

It is important to note that by selecting a hypothesis representation, the designer of the learning algorithm implicitly defines the space of all hypotheses that the program can ever represent and therefore can ever learn.
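To make the search view concrete, here is a brute-force sketch that walks the whole hypothesis space and keeps every hypothesis that agrees with all training examples. It is only an illustration of the idea, not an efficient learning algorithm. The attribute value names and the four training examples are assumptions chosen for this example, and `classify` and `consistent` are hypothetical helper names.

```python
from itertools import product

# Assumed value names, in the order Sky, AirTemp, Humidity, Wind, Water, Forecast.
DOMAINS = [
    ("Sunny", "Cloudy", "Rainy"),
    ("Warm", "Cold"),
    ("Normal", "High"),
    ("Strong", "Weak"),
    ("Warm", "Cool"),
    ("Same", "Change"),
]

# Illustrative training examples: (instance, EnjoySport label).
TRAINING = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), 1),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), 1),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), 0),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), 1),
]

def classify(h, x):
    # "?" matches anything; "ø" matches nothing because it equals no real value.
    return int(all(c == "?" or c == v for c, v in zip(h, x)))

def consistent(h, examples):
    # A hypothesis fits the data if it predicts every label correctly.
    return all(classify(h, x) == label for x, label in examples)

# Each slot of a hypothesis is one attribute value, "?" or "ø".
hypothesis_space = product(*[values + ("?", "ø") for values in DOMAINS])

fits = [h for h in hypothesis_space if consistent(h, TRAINING)]
for h in fits:
    print(h)
print(len(fits))  # 6
```

With these assumed examples, six hypotheses survive, ranging from ('Sunny', 'Warm', '?', 'Strong', '?', '?') to the more general ('Sunny', '?', '?', '?', '?', '?') and ('?', 'Warm', '?', '?', '?', '?'). Exhaustive enumeration is practical only because this space is tiny.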

Instance Space

Consider, for example, the instances X and hypotheses H in the EnjoySport learning task.

Given that the attribute Sky has three possible values, and that AirTemp, Humidity, Wind, Water, and Forecast each have two possible values, the instance space X contains exactly 3 · 2 · 2 · 2 · 2 · 2 = 96 distinct instances.
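The count can be confirmed by enumerating the Cartesian product of the attribute domains. The value names below are assumed for illustration; only the number of values per attribute matters for the result.

```python
from itertools import product

# Assumed value names; the text fixes only how many values each attribute has.
DOMAINS = {
    "Sky": ("Sunny", "Cloudy", "Rainy"),
    "AirTemp": ("Warm", "Cold"),
    "Humidity": ("Normal", "High"),
    "Wind": ("Strong", "Weak"),
    "Water": ("Warm", "Cool"),
    "Forecast": ("Same", "Change"),
}

# Every instance is one choice of value per attribute.
instances = list(product(*DOMAINS.values()))

print(len(instances))  # 96
```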

Example:

Assume there are two features, F1 and F2, where F1 takes the values A and B and F2 takes the values X and Y.

F1 -> A, B

F2 -> X, Y

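Instance Space: (A, X), (A, Y), (B, X), (B, Y) – 4 instances

Hypothesis Space (syntactically distinct): (A, X), (A, Y), (A, ø), (A, ?), (B, X), (B, Y), (B, ø), (B, ?), (ø, X), (ø, Y), (ø, ø), (ø, ?), (?, X), (?, Y), (?, ø), (?, ?) – 16 hypotheses

Hypothesis Space (semantically distinct): (A, X), (A, Y), (A, ?), (B, X), (B, Y), (B, ?), (?, X), (?, Y), (?, ?), plus a single empty hypothesis such as (ø, ø) standing for every hypothesis that contains ø – 10 hypotheses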
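The two-feature example is small enough to enumerate directly. The sketch below builds the instance space, the syntactically distinct hypotheses, and then groups hypotheses by the set of instances they accept to count the semantically distinct ones. The helper name `extension` is an assumption for this example.

```python
from itertools import product

F1 = ("A", "B")
F2 = ("X", "Y")

instances = list(product(F1, F2))

# Each slot may hold a feature value, "ø" (nothing acceptable) or "?" (anything acceptable).
syntactic = list(product(F1 + ("ø", "?"), F2 + ("ø", "?")))

def extension(h):
    """Set of instances that hypothesis h classifies as positive."""
    return frozenset(
        x for x in instances
        if all(c == "?" or c == v for c, v in zip(h, x))
    )

# Hypotheses that accept exactly the same instances are semantically identical.
semantic = {extension(h) for h in syntactic}

print(len(instances), len(syntactic), len(semantic))  # 4 16 10
```

All seven hypotheses containing “ø” accept no instance at all, so they collapse into one empty hypothesis, which is why 16 drops to 10.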

Hypothesis Space

Similarly, there are 5 · 4 · 4 · 4 · 4 · 4 = 5120 syntactically distinct hypotheses within H.

Notice, however, that every hypothesis containing one or more “ø” symbols represents the empty set of instances; that is, it classifies every instance as negative.

Therefore, the number of semantically distinct hypotheses is only 1 + (4 · 3 · 3 · 3 · 3 · 3) = 973.
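Both counts follow from the number of values per attribute, as this short arithmetic sketch shows. The variable names are assumptions for this example.

```python
from math import prod

# Number of possible values for Sky, AirTemp, Humidity, Wind, Water, Forecast.
value_counts = [3, 2, 2, 2, 2, 2]

# Syntactic: each slot holds one of its values, or "?", or "ø" (n + 2 choices).
syntactic = prod(n + 2 for n in value_counts)

# Semantic: each slot holds one of its values or "?" (n + 1 choices),
# plus one extra hypothesis for everything that contains "ø".
semantic = 1 + prod(n + 1 for n in value_counts)

print(syntactic)  # 5120
print(semantic)   # 973
```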

Our EnjoySport example is a very simple learning task, with a relatively small, finite hypothesis space.

General-to-Specific Ordering of Hypotheses

To illustrate the general-to-specific ordering, consider the two hypotheses

h1 = (Sunny, ?, ?, Strong, ?, ?)

h2 = (Sunny, ?, ?, ?, ?, ?)

Now consider the sets of instances that are classified positive by h1 and by h2. Because h2 imposes fewer constraints on the instance, it classifies more instances as positive.

In fact, any instance classified positive by h1 will also be classified positive by h2. Therefore, we say that h2 is more general than h1.

For any instance x in X and hypothesis h in H, we say that x satisfies h if and only if h(x) = 1.

We define the more_general_than_or_equal_to relation in terms of the sets of instances that satisfy the two hypotheses: hj is more_general_than_or_equal_to hk if and only if every instance that satisfies hk also satisfies hj.
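One way to test this relation is to check, over every instance, that anything accepted by the more specific hypothesis is also accepted by the more general one. The sketch below does exactly that for h1 and h2. The attribute value names in `DOMAINS` are assumed for illustration, and the function is a straightforward set-inclusion check rather than an optimized implementation.

```python
from itertools import product

# Assumed value names, in the order Sky, AirTemp, Humidity, Wind, Water, Forecast.
DOMAINS = [
    ("Sunny", "Cloudy", "Rainy"),
    ("Warm", "Cold"),
    ("Normal", "High"),
    ("Strong", "Weak"),
    ("Warm", "Cool"),
    ("Same", "Change"),
]

def satisfies(h, x):
    """x satisfies h if and only if h(x) = 1."""
    return all(c == "?" or c == v for c, v in zip(h, x))

def more_general_than_or_equal_to(hj, hk):
    """True if every instance that satisfies hk also satisfies hj."""
    return all(
        satisfies(hj, x)
        for x in product(*DOMAINS)
        if satisfies(hk, x)
    )

h1 = ("Sunny", "?", "?", "Strong", "?", "?")
h2 = ("Sunny", "?", "?", "?", "?", "?")

print(more_general_than_or_equal_to(h2, h1))  # True
print(more_general_than_or_equal_to(h1, h2))  # False
```

The second call returns False because a sunny day with weak wind satisfies h2 but not h1.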

Summary

This tutorial discussed the concept learning task in machine learning.